frutos do mar

frutos do mar by Armada (2014)

Frutos do mar is a demoscene project released at the Assembly 2014 event. It’s a somewhat loose collection of different experiments, with a little effort put into combining the pieces into a semi-coherent production. Like the majority of my demoscene projects, the actual product was put together over the course of just a few days, with the goal of taking part in the demo competition at the event, so there was a strict deadline for getting it finished. That doesn’t mean that everything you see was created during that time. I’ll document some of the details here.

First, about the underlying tech. While it’s fun to make productions like this now and then, other things tend to take priority, so it’s nice to build on a foundation that provides the boilerplate code. I have often worked together with friends who made the engine while I worked on effects and on combining things. In recent years I’ve also sometimes used an open source engine. This is the first demo I have made using the Unity engine. As this is written nine months later, I don’t remember exactly which version I used back then, but it was either 4.3 or 4.5.

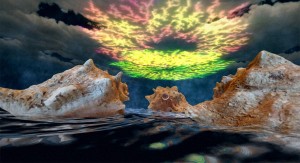

However, Unity isn’t the only tool used for leverage. The 3D models you see in this demo are almost exclusively made with photogrammetry: the models are automatically generated from tens of photographs of the same target. I’ve used various iterations of Autodesk’s software for the task (Project Photofly, 123D Catch, Project Memento; all abandoned now). In addition, some of the backgrounds are made with Microsoft’s Photosynth. The photographs were taken in several different locations over the years; this demo features objects and locations from at least Belgium, Sicily and Portugal. While Autodesk is doing a very good job with their software, there are naturally still many failed tries for each successful 3D model.

The Grandma demo was our first attempt at working with this kind of content. In some ways this project is a sort of continuation of that, trying to expand the types of scenes. So basically this is my first attempt at mixing and matching photographic backgrounds and 3D models with lighting and post-processing tweaks. I learned that making things fit visually is certainly not straightforward. At first I spent maybe a few hours tweaking the visuals of some scenes. But since there was a serious lack of time to get all the parts in, at least in some form, many of the scenes were just added with only a few minutes spent on lighting and post-processing adjustments. Unfortunately this of course shows, so I guess that somebody who works with these things professionally probably wouldn’t call this a success.

The models aren’t the only experimental thing, though. I had also been wondering how it would work to record camera movements by looking at the 3D content through an Oculus Rift HMD, and to move objects using the hand tracking of the LEAP Motion. Back then I had the first development kit (DK1). I actually got a DK2 shipped to me in the middle of this project, during the final stretch of development. But it wasn’t a straight drop-in replacement for the DK1-specific code and prefabs, so I decided not to spend any extra time switching to the newer hardware during development.

Anyway, I wrote some simple code to record and play back the movements and rotations. For the camera I recorded the rotation, and for moving its position I added simple keyboard-based movement with inertia. While the original idea was to show with the camera “what somebody would actually look at when seeing weird things flying in the sky”, it didn’t take long to realize that the results were still just way too chaotic. So I ended up toning down my movements a bit when recording, while otherwise keeping the system.
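To give an idea of what such a system involves, here is a minimal sketch in Python rather than the Unity scripts used in the demo. The names `RotationTrack` and `InertialMover`, the Euler-angle representation and the acceleration and damping values are all assumptions made for illustration; the demo’s real code may well look different.

```python
from bisect import bisect_right


class RotationTrack:
    """Timestamped (yaw, pitch, roll) samples in degrees (hypothetical format)."""

    def __init__(self):
        self.times = []
        self.angles = []

    def record(self, t, yaw, pitch, roll):
        # Samples are assumed to arrive in increasing time order.
        self.times.append(t)
        self.angles.append((yaw, pitch, roll))

    def sample(self, t):
        # Clamp to the first/last sample outside the recorded range.
        if t <= self.times[0]:
            return self.angles[0]
        if t >= self.times[-1]:
            return self.angles[-1]
        i = bisect_right(self.times, t)
        t0, t1 = self.times[i - 1], self.times[i]
        k = (t - t0) / (t1 - t0)
        a, b = self.angles[i - 1], self.angles[i]
        # Naive per-angle interpolation; ignores wrap-around at 360 degrees.
        return tuple(x + (y - x) * k for x, y in zip(a, b))


class InertialMover:
    """Keyboard-style movement: input accelerates, damping slows down."""

    def __init__(self, acceleration=4.0, damping=2.0):
        self.acceleration = acceleration
        self.damping = damping
        self.position = [0.0, 0.0, 0.0]
        self.velocity = [0.0, 0.0, 0.0]

    def update(self, key_input, dt):
        # key_input is a direction such as (0, 0, 1) while a key is held.
        for i in range(3):
            self.velocity[i] += key_input[i] * self.acceleration * dt
            self.velocity[i] *= max(0.0, 1.0 - self.damping * dt)
            self.position[i] += self.velocity[i] * dt
```

During recording, `record` would be called once per frame with the headset rotation; during playback, `sample` is called with the demo time. The mover keeps drifting for a moment after the key is released, which is what gives the camera its inertia.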

Making camera tracks that way was still somewhat time-consuming, as several takes were needed. Since time was running out as the deadline approached, the final scene, for example, still only has the camera moving along a spline that was meant to be temporary. For a simpler camera like that I added a bit of code which adds some delta movement from a record-based camera to retain the same kind of subtle jiggle.
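One way to read “delta movement” is as the difference between a recorded signal and a smoothed version of it, which leaves only the small, fast wobble. The sketch below shows that interpretation in Python for a single angle channel. It is a guess at the idea, not the demo’s code, and `moving_average`, `extract_jiggle`, `apply_jiggle` and the window radius are hypothetical.

```python
def moving_average(values, radius):
    """Smooth a list of floats with a centered window, clamped at the ends."""
    out = []
    for i in range(len(values)):
        lo = max(0, i - radius)
        hi = min(len(values), i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out


def extract_jiggle(recorded, radius=2):
    """Keep only the high-frequency part of a recorded channel."""
    smooth = moving_average(recorded, radius)
    return [r - s for r, s in zip(recorded, smooth)]


def apply_jiggle(spline_values, jiggle, strength=1.0):
    """Add the jiggle on top of a spline-driven channel, looping if needed."""
    return [
        s + strength * jiggle[i % len(jiggle)]
        for i, s in enumerate(spline_values)
    ]
```

A perfectly steady recording produces no jiggle at all, so the spline camera is left untouched in that case.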

The LEAP Motion based system I tried to use for recording object movements turned out to be too much work per recording to be useful. Getting a recording done wasn’t hard, but manual adjustments like moving, scaling and cropping the data added up to a bit too much. I could have modified the workflow to automate that, but since I didn’t have any complex movements in mind, this system was used for only one object’s movement in the end. For the rest I just wrote some simple scripts, each containing a few lines of code to do a specific task.

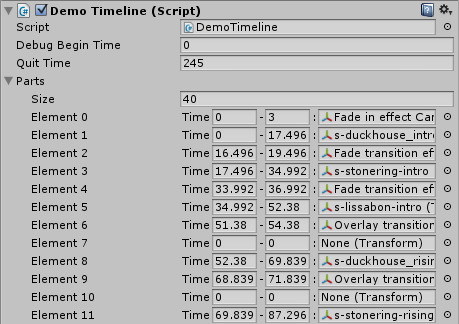

The different parts of the demo were first constructed in separate scenes, each under a single object in the hierarchy view, which was then simply dragged out to become a separate prefab. Many of the scenes also have a separate “recording scene” which contains the VR recording/playback system. The scene prefabs were dragged into a “master scene” (but initially disabled so they don’t all show up). I first tested whether I could put the parts on a timeline using the internal animation window, but I felt it was cumbersome and somehow hard to make sure things happened at exactly the right frame or time. So I made a simple ad hoc timeline solution instead.

The timing of the demo is controlled by a very simple timeline script which has an array of references to each possible prefab, along with its begin and end time. My usual workflow for synchronizing to music is to make notes of what’s happening in the music and write down possible sync times (with millisecond precision), so I just used the time values from my notes. Several parts (layers) can run at the same time, so fade/overlay transition effects were just parts among the actual ones. At the start of the demo the script does a pre-init by enabling all parts for the duration of one rendered frame (with the hack of rendering black on top), so there shouldn’t be content/shader init hiccups in the middle of the demo when switching parts. I didn’t bother implementing scrubbing back and forth in time for this demo, so there’s only a start time to help with debugging. The texts shown at the beginning and end of the demo naturally use my own Pixel-Perfect Dynamic Text, available in the Asset Store.
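The structure of such a timeline can be sketched roughly as follows. This is Python rather than a Unity script, and `Part`, `Timeline`, the part names and the times are all made up for illustration. In Unity the `enabled` flag would presumably correspond to activating or deactivating the prefab instance.

```python
from dataclasses import dataclass


@dataclass
class Part:
    name: str
    begin_ms: int
    end_ms: int
    enabled: bool = False


class Timeline:
    def __init__(self, parts, start_ms=0):
        self.parts = parts
        self.start_ms = start_ms  # debugging aid: begin playback from here
        self.warmed_up = False

    def update(self, elapsed_ms):
        """Enable the parts active at this time; return their names."""
        if not self.warmed_up:
            # Pre-init: enable every part for one frame so content and
            # shaders get initialized up front (a black overlay would
            # hide this frame from the viewer).
            for part in self.parts:
                part.enabled = True
            self.warmed_up = True
            return [part.name for part in self.parts]
        now = self.start_ms + elapsed_ms
        for part in self.parts:
            part.enabled = part.begin_ms <= now < part.end_ms
        return [part.name for part in self.parts if part.enabled]


# Overlapping ranges give layers: the fade runs on top of both scenes.
demo = Timeline([
    Part("intro", 0, 1000),
    Part("fade", 900, 1100),
    Part("sea", 1000, 5000),
])
```

Because a transition is just another entry with its own time range, no special handling is needed for overlays.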

As for the actual effects you see in the demo, some of them are just Unity’s example effects, like the water effects, with some tweaks, and the few particle effects just use Unity’s own particle system. For me this demo was more about learning and experimenting with different kinds of techniques in content production and usage. Maybe the only notable piece of custom effect code is the colorful growing structure in the sky towards the end of the demo. It’s a simulation of diffusion-limited aggregation, which in itself is very simple to implement, but needs some tricks to make it fast. That particular code has its roots in my game for the 5th Ludum Dare back in 2004. I later made a new optimized implementation for Unity which was fast enough to run on mobile devices, both the simulation and the glowy look, without the need for post-processing, and ended up adding it to that scene. The post-processing for the scenes includes antialiasing, vignetting, a noise effect, tilt shift, sun shafts, color correction, ambient occlusion, contrast stretch and bloom; which ones are enabled depends on the scene.
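For readers unfamiliar with diffusion-limited aggregation, here is a minimal 2D sketch in Python. It is a textbook version of the technique, not the optimized Unity implementation mentioned above. The function name `grow_dla` and the spawn and kill radii are assumptions. Spawning walkers just outside the cluster and discarding those that wander too far is a common speed-up, not necessarily one of the tricks used in the demo.

```python
import math
import random

STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def grow_dla(num_particles, seed=0):
    """Grow a cluster on an unbounded integer grid; returns a set of (x, y)."""
    rng = random.Random(seed)
    stuck = {(0, 0)}  # the initial seed particle
    max_radius = 0.0
    while len(stuck) <= num_particles:
        # Launch each walker on a circle just outside the current cluster.
        spawn_radius = max_radius + 3.0
        kill_radius = spawn_radius + 10.0
        angle = rng.uniform(0.0, 2.0 * math.pi)
        x = round(spawn_radius * math.cos(angle))
        y = round(spawn_radius * math.sin(angle))
        while True:
            # Stick as soon as any 4-neighbor belongs to the cluster.
            if any((x + dx, y + dy) in stuck for dx, dy in STEPS):
                stuck.add((x, y))
                max_radius = max(max_radius, math.hypot(x, y))
                break
            dx, dy = rng.choice(STEPS)
            x += dx
            y += dy
            if math.hypot(x, y) > kill_radius:
                break  # wandered off; launch a fresh walker instead
    return stuck
```

Calling `grow_dla(200)` returns the seed plus 200 attached cells, which form the branching, coral-like shape typical of this kind of simulation.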

The demo ended up in 6th place in the demo competition. It did stand out from the others in its own way though, and this was recognized by the Alternative Party organizers who separately awarded this demo the “Alternative demo award”.

Finally, here are the credits for the demo:

Producer, design, code – tonic (that’s me)

Music – !Cube

Additional models, design – Phlebas, Random, stRana

Thanks to – McLad, Skaven, Tug, XMunkki

Click to download (executable for Windows PC)

Or watch the YouTube video: